AR Object Toolkit 2.0 – Augmented Reality For Shopping, Merchandising, Product Demos, and More

Retailing is one of the most challenging businesses out there, but it’s also one where technology can give a company a real competitive advantage. We’ve developed a set of next-generation AR tools that enable companies to place photorealistic 3D models of their products in any environment in real time.

GrooveTech AR Object Toolkit 2.0

Being able to visualize photorealistic, high-resolution renderings of a product from various perspectives, in every available color and material, is important. Some AR object tools can do this, but their models fail to look realistic once placed in a real environment. Our technology uses the real environment’s lighting to render dynamic images that are both breathtaking and realistic.

Our platform supports the SLAM-based tracking systems of both Apple’s ARKit and Google’s ARCore for Android.

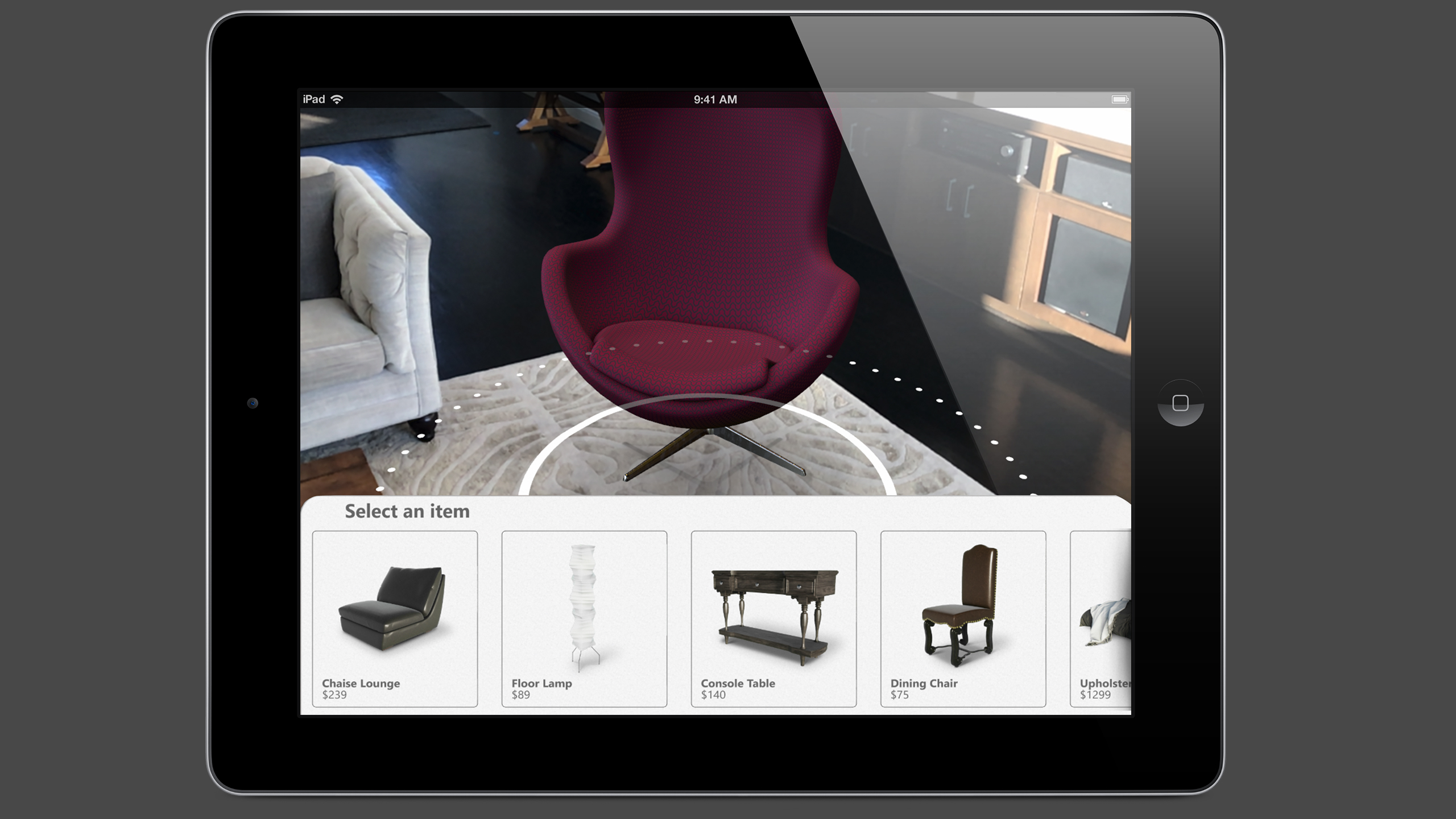

Customization at Your Fingertips – Access Texture Library

The tools also allow each SKU to be customized while it is viewed in 3D. A salesperson or end user can restyle the 3D model in real time using a virtually unlimited palette of color swatches and pattern textures, which gives visual merchandisers remarkable versatility.

Our AR Object Toolkit displays the finest details, down to the stitching on a piece of furniture. Dynamic lighting and shadows make an object look as if it were really there.

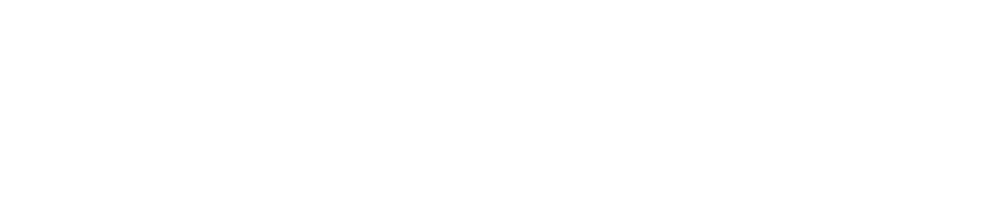

Any Object, Placed Anywhere, in Real Time Using Augmented Reality

Our GrooveTech AR Object Toolkit turns raw data into beautiful visual experiences highlighting a product from any angle, in any color or texture, in any environment.

Users can modify objects and view them in real time, in their own space, without the need for physical inventory. More complex items with multiple options can be configured as well.

Above is an overview of the toolkit controls available to the user in our app:

- Lighting and Color Calibration Controls – Give the user a set of tools for dynamically adjusting the object’s lighting and color

- Cubemap Color and Depth Presets – Presets for quickly changing the color and depth lighting of the object

- 3D Object – The 3D CGI object

- Object Position Controls – Controls to move the item within the space, scaled to the actual real-world environment

- Object Rotation Controls – Controls to rotate the item without changing its position in the real-world environment

- Access Texture Library – Launches the Texture Library

- Remove Object – Control to remove individual digital AR objects once placed
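The position and rotation controls can be modeled as a small transform: a position on the detected floor plus a rotation about the vertical axis, applied around the object’s own pivot so that rotating never moves it. The sketch below is a minimal illustration, not the toolkit’s code. `PlacedObject` and its methods are hypothetical names, and the sketch assumes the model is authored at real-world size in meters, the world unit both ARKit and ARCore use, so no extra scale factor is needed.

```python
import math
from dataclasses import dataclass


@dataclass
class PlacedObject:
    """Hypothetical state of one AR object placed in the room.

    All distances are in meters, the world unit used by ARKit and ARCore,
    so a model authored at real-world size is shown at its true size.
    """

    x: float = 0.0            # meters along the floor
    y: float = 0.0            # meters above the detected floor plane
    z: float = 0.0            # meters along the floor
    yaw_degrees: float = 0.0  # rotation about the vertical (y) axis

    def move(self, dx: float, dz: float) -> None:
        """Position control: slide the object across the floor."""
        self.x += dx
        self.z += dz

    def rotate(self, degrees: float) -> None:
        """Rotation control: spin about the object's own pivot; position is unchanged."""
        self.yaw_degrees = (self.yaw_degrees + degrees) % 360.0

    def to_world(self, px: float, py: float, pz: float) -> tuple:
        """Map a model-space point (meters) to world space: rotate about y, then translate."""
        theta = math.radians(self.yaw_degrees)
        c, s = math.cos(theta), math.sin(theta)
        wx = c * px + s * pz
        wz = -s * px + c * pz
        return (wx + self.x, py + self.y, wz + self.z)


# Usage: place a chair, slide it 1.5 m along x and 2 m along -z, then turn it 90 degrees.
chair = PlacedObject()
chair.move(1.5, -2.0)
chair.rotate(90)
print(chair.x, chair.z, chair.yaw_degrees)  # 1.5 -2.0 90.0
```

Keeping the yaw angle separate from the position mirrors the split between the two control sets: the rotation controls only ever change `yaw_degrees`.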

On-the-Spot Color Calibration and Adjustment

Our goal was to create a physically accurate lighting setup so that an object placed in augmented reality looks as realistic as possible. We also wanted viewers to be able to get very close to the materials, close enough to see the actual threads in a fabric or the grain in wood.

We use the device’s camera to capture a light probe, which gives us real-world lighting information: color, intensity, and light direction. We couple this with HDR cube maps, which provide detailed, high-dynamic-range lighting. Users can select from different cube maps to further enhance and customize their lighting conditions.
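To make “color, intensity, and light direction” concrete, here is one common way to summarize an HDR light probe or cube map: average the radiance to get an ambient color and intensity, and take the luminance-weighted sum of the sample directions as the dominant light direction. This is a hedged sketch rather than the toolkit’s method. The input format (a list of unit-direction and linear-RGB pairs, for example one per cube-map texel) and the function names are assumptions, and a production version would also weight each texel by its solid angle.

```python
import math

# Rec. 709 luminance weights for linear RGB.
LUMA = (0.2126, 0.7152, 0.0722)


def luminance(rgb):
    return sum(w * c for w, c in zip(LUMA, rgb))


def summarize_light_probe(samples):
    """Summarize HDR probe samples as (ambient color, intensity, main light direction).

    `samples` is a list of (direction, rgb) pairs: `direction` is a unit vector
    pointing from the object out toward the environment, and `rgb` is the linear
    HDR radiance seen in that direction. Solid-angle weighting is omitted.
    """
    n = len(samples)
    mean_rgb = [0.0, 0.0, 0.0]
    dir_sum = [0.0, 0.0, 0.0]
    for direction, rgb in samples:
        lum = luminance(rgb)
        for i in range(3):
            mean_rgb[i] += rgb[i] / n
            dir_sum[i] += lum * direction[i]  # bright samples pull the direction toward them

    intensity = luminance(mean_rgb)
    # Normalize the color so its luminance is 1; `intensity` carries the brightness.
    color = tuple(c / intensity for c in mean_rgb) if intensity > 0 else (1.0, 1.0, 1.0)
    length = math.sqrt(sum(c * c for c in dir_sum))
    # Perfectly uniform lighting has no dominant direction; fall back to straight up.
    main_dir = tuple(c / length for c in dir_sum) if length > 0 else (0.0, 1.0, 0.0)
    return color, intensity, main_dir


# Usage: six axis-aligned samples with a bright white light overhead (+y).
dim = (0.1, 0.1, 0.1)
probe = [((1, 0, 0), dim), ((-1, 0, 0), dim), ((0, 1, 0), (10.0, 10.0, 10.0)),
         ((0, -1, 0), dim), ((0, 0, 1), dim), ((0, 0, -1), dim)]
color, intensity, main_dir = summarize_light_probe(probe)
```

Splitting the result into a normalized color and a separate intensity makes it easy to feed either value to a renderer’s light independently.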

We’ve also added internal tools that let merchandisers and product managers modify the 3D assets without having to go back to an artist for changes. These tools make modifying the model data quick and easy. Below is an example of the different color and depth presets that can be applied to the textures of each model.
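A color preset can be stored as a few parameters applied non-destructively to a texture, so the source asset is never altered. The sketch below assumes a preset consists of an exposure adjustment (in stops) and a saturation factor. `ColorPreset` and `apply_preset` are hypothetical names, and the sketch does not attempt to model the depth presets, whose parameters are not described here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ColorPreset:
    """Hypothetical texture preset: an exposure shift and a saturation factor."""

    name: str
    exposure_stops: float = 0.0  # +1.0 doubles brightness, -1.0 halves it
    saturation: float = 1.0      # 0.0 = greyscale, 1.0 = unchanged, >1.0 = more vivid


LUMA = (0.2126, 0.7152, 0.0722)  # Rec. 709 weights for linear RGB


def apply_preset(pixels, preset):
    """Return new linear-RGB pixels with `preset` applied; the input list is not modified."""
    gain = 2.0 ** preset.exposure_stops
    result = []
    for rgb in pixels:
        scaled = [c * gain for c in rgb]
        lum = sum(w * c for w, c in zip(LUMA, scaled))
        # Blend each channel toward (or away from) the pixel's luminance.
        result.append(tuple(lum + preset.saturation * (c - lum) for c in scaled))
    return result


# Usage: the same fabric texel under two presets.
texel = [(0.25, 0.5, 0.125)]
brighter = apply_preset(texel, ColorPreset("Bright", exposure_stops=1.0))
muted = apply_preset(texel, ColorPreset("Muted", saturation=0.0))
```

Because presets are small immutable records, a merchandiser could keep several per model and switch between them without re-exporting the texture.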

We render ray-traced ambient occlusion shadows to further strengthen the sense that the object is truly in the room. Below is an example in which the actual threads are visible.

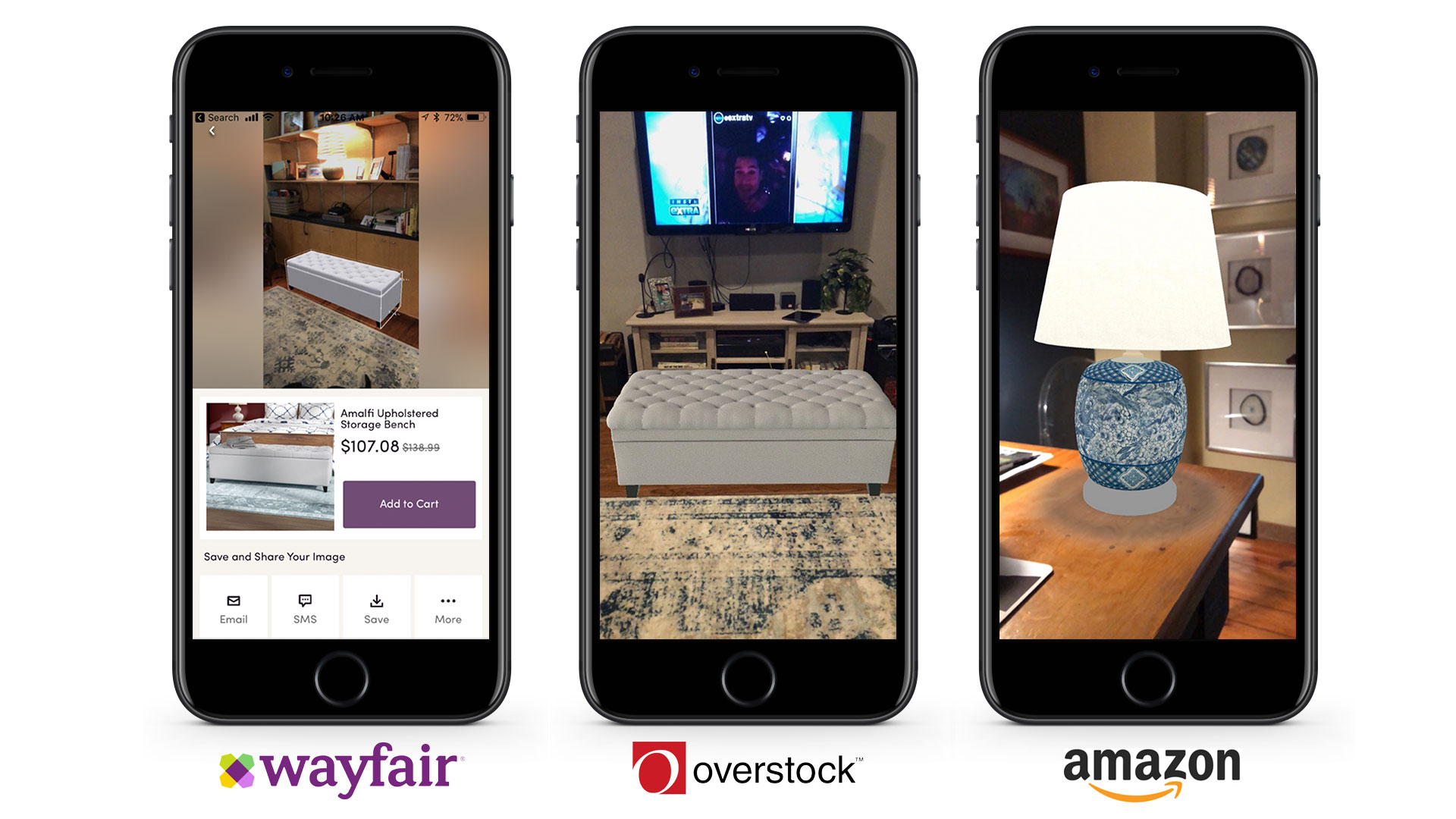
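Ambient occlusion estimates how much of the surrounding hemisphere a surface point can “see.” A ray-traced version casts rays from the point over the hemisphere around its normal and counts how many escape nearby geometry. The sketch below shows that technique on the CPU, with spheres standing in for the scene geometry; production renderers trace against the actual mesh on the GPU. All function names here are hypothetical.

```python
import math
import random


def ambient_occlusion(point, normal, spheres, samples=256, max_dist=1.0, seed=0):
    """Estimate ambient occlusion at `point` by casting rays.

    Casts cosine-weighted rays over the hemisphere around the unit `normal`
    and returns the fraction that travel `max_dist` without hitting an
    occluder: 1.0 means fully open, 0.0 fully occluded. Occluders are
    spheres given as ((cx, cy, cz), radius).
    """
    rng = random.Random(seed)
    # Build an orthonormal basis (t, b, n) around the surface normal.
    n = normal
    helper = (1.0, 0.0, 0.0) if abs(n[0]) < 0.9 else (0.0, 1.0, 0.0)
    t = _normalize(_cross(helper, n))
    b = _cross(n, t)
    unoccluded = 0
    for _ in range(samples):
        # Cosine-weighted hemisphere sample: uniform point on a disk, projected up.
        u1, u2 = rng.random(), rng.random()
        r, phi = math.sqrt(u1), 2.0 * math.pi * u2
        lx, ly, lz = r * math.cos(phi), r * math.sin(phi), math.sqrt(1.0 - u1)
        d = tuple(lx * t[i] + ly * b[i] + lz * n[i] for i in range(3))
        if not any(_hit_sphere(point, d, c, rad, max_dist) for c, rad in spheres):
            unoccluded += 1
    return unoccluded / samples


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def _hit_sphere(origin, direction, center, radius, max_dist):
    """True if the unit-length ray hits the sphere at a distance in (0, max_dist]."""
    oc = tuple(origin[i] - center[i] for i in range(3))
    half_b = sum(oc[i] * direction[i] for i in range(3))
    c = sum(x * x for x in oc) - radius * radius
    disc = half_b * half_b - c
    if disc < 0:
        return False
    root = math.sqrt(disc)
    return any(1e-6 < dist <= max_dist for dist in (-half_b - root, -half_b + root))


# Usage: a point on the floor, with and without a ball hovering just above it.
open_floor = ambient_occlusion((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), [])
under_ball = ambient_occlusion((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), [((0.0, 0.6, 0.0), 0.5)])
```

Because the rays are cosine-weighted, the fraction of escaping rays directly estimates the cosine-weighted visibility used in the usual AO definition; more samples reduce noise at the cost of render time.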

AR Can Help Stop Buyer Uncertainty

Because we can place accurately sized computer-generated objects within a user’s real world, we can reduce a buyer’s uncertainty during the shopping experience. And because the objects are digital, we can also give customers the ability to customize any object in their environment, something the major retailers have not done yet.

Companies like Ikea, Lowe’s, Overstock, Amazon, Pottery Barn, Target, Wayfair, and Ashley HomeStore are using AR in their retail experiences. Most have released minimum viable product AR demonstrations, some less successful than others. Our AR Object Toolkit delivers a full set of tools that enhance the overall experience.

Augmented reality succeeds when the user is compelled to reach out and touch the virtual gas range, sit in the virtual chair, or jump onto the virtual bed.

AR Object Visualizer Process – Why Our AR Objects Look Better Than Others

Turning physical objects in our real world into digital copies of themselves is a complex task. The basic idea is similar to taking a photograph, or rather a multi-dimensional photograph. It becomes vastly more complex because we capture not just an image but also depth, dimension, and the fine detail that lets us control the object’s lighting, all of which are necessary to create amazing virtual copies.

The capture process involves various techniques depending on the object’s size and material qualities.

We use a combination of photo capture, scanning, and artist reconstruction. Our proprietary process is highly proceduralized for efficiency, focusing on visual quality and fidelity.

One of the significant advantages of the Groove Jones capture tech is that we can use photo capture yet still extract the diffuse and specular lighting properties. This gives us the best of both worlds: photographic quality and interactive lighting. We want the objects to look real, and we don’t want the illumination “baked in” and therefore unresponsive as viewers move around the object.

Most capture processes have to choose one or the other. In one typical scenario, the lighting is interactive, which improves the 3D effect, but the object looks like it belongs in a game. In the other, the object’s lighting is “baked in,” so the object looks like a photograph but not like something you could reach out and touch, and feeling like it is there is what matters most.
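The difference between baked and interactive lighting comes down to what is stored. A baked texture stores the final color under one fixed light; a capture that separates diffuse and specular reflectance can recompute the color every frame. The sketch below relights a single surface point with a Lambert diffuse term plus a Blinn-Phong specular term. These are standard textbook models chosen for illustration, not necessarily the shading model Groove Jones uses, and `shade` is a hypothetical name.

```python
import math


def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v) if length > 0 else (0.0, 0.0, 0.0)


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def shade(albedo, specular, shininess, normal, to_light, to_eye, light_rgb):
    """Relight one surface point: Lambert diffuse plus Blinn-Phong specular.

    `albedo` and `specular` are per-channel reflectances recovered at capture
    time. Because they are stored separately from any lighting, the result is
    recomputed whenever the light or the viewer moves.
    """
    n, l, v = _normalize(normal), _normalize(to_light), _normalize(to_eye)
    n_dot_l = max(0.0, _dot(n, l))
    if n_dot_l == 0.0:
        return (0.0, 0.0, 0.0)  # the light is behind the surface
    h = _normalize(tuple(a + b for a, b in zip(l, v)))  # half vector between light and eye
    spec = max(0.0, _dot(n, h)) ** shininess
    return tuple(light_rgb[i] * (albedo[i] * n_dot_l + specular[i] * spec) for i in range(3))


# Usage: the same captured material under an overhead light and a low, raking light.
fabric = dict(albedo=(0.5, 0.5, 0.5), specular=(0.04, 0.04, 0.04), shininess=32,
              normal=(0.0, 1.0, 0.0), to_eye=(0.0, 1.0, 0.0), light_rgb=(1.0, 1.0, 1.0))
overhead = shade(to_light=(0.0, 1.0, 0.0), **fabric)
raking = shade(to_light=(math.sin(math.radians(60)), 0.5, 0.0), **fabric)
```

A baked texture would return the same color in both cases; with the reflectance stored separately, the raking light correctly darkens the surface and shrinks the highlight.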

Our Approach to Digital Asset Creation

- Asset Setup and Fabrication

- Capture

- Process

- Optimize

- Post for Review

- Publish for App
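These stages form an ordered pipeline, which an asset-tracking tool might represent as an ordered enum. In this sketch the stage names come from the list, while the enum, `next_stage`, and the rule that a rejected review sends an asset back to processing are hypothetical assumptions made for illustration.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Asset-creation stages, in pipeline order."""

    SETUP_AND_FABRICATION = 1
    CAPTURE = 2
    PROCESS = 3
    OPTIMIZE = 4
    POST_FOR_REVIEW = 5
    PUBLISH_FOR_APP = 6


def next_stage(stage, approved=True):
    """Return the stage after `stage`, or None once the asset is published."""
    if stage is Stage.PUBLISH_FOR_APP:
        return None
    if stage is Stage.POST_FOR_REVIEW and not approved:
        return Stage.PROCESS  # hypothetical: rejected assets are reprocessed
    return Stage(stage + 1)


# Usage: walk an asset that passes review straight through the pipeline.
path = [Stage.SETUP_AND_FABRICATION]
while next_stage(path[-1]) is not None:
    path.append(next_stage(path[-1]))
```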

Want to Learn More? We can help!

Interested in Learning More? Let's get you connected to one of our Digital Strategists.
